Build ML Model Containers, Automatically

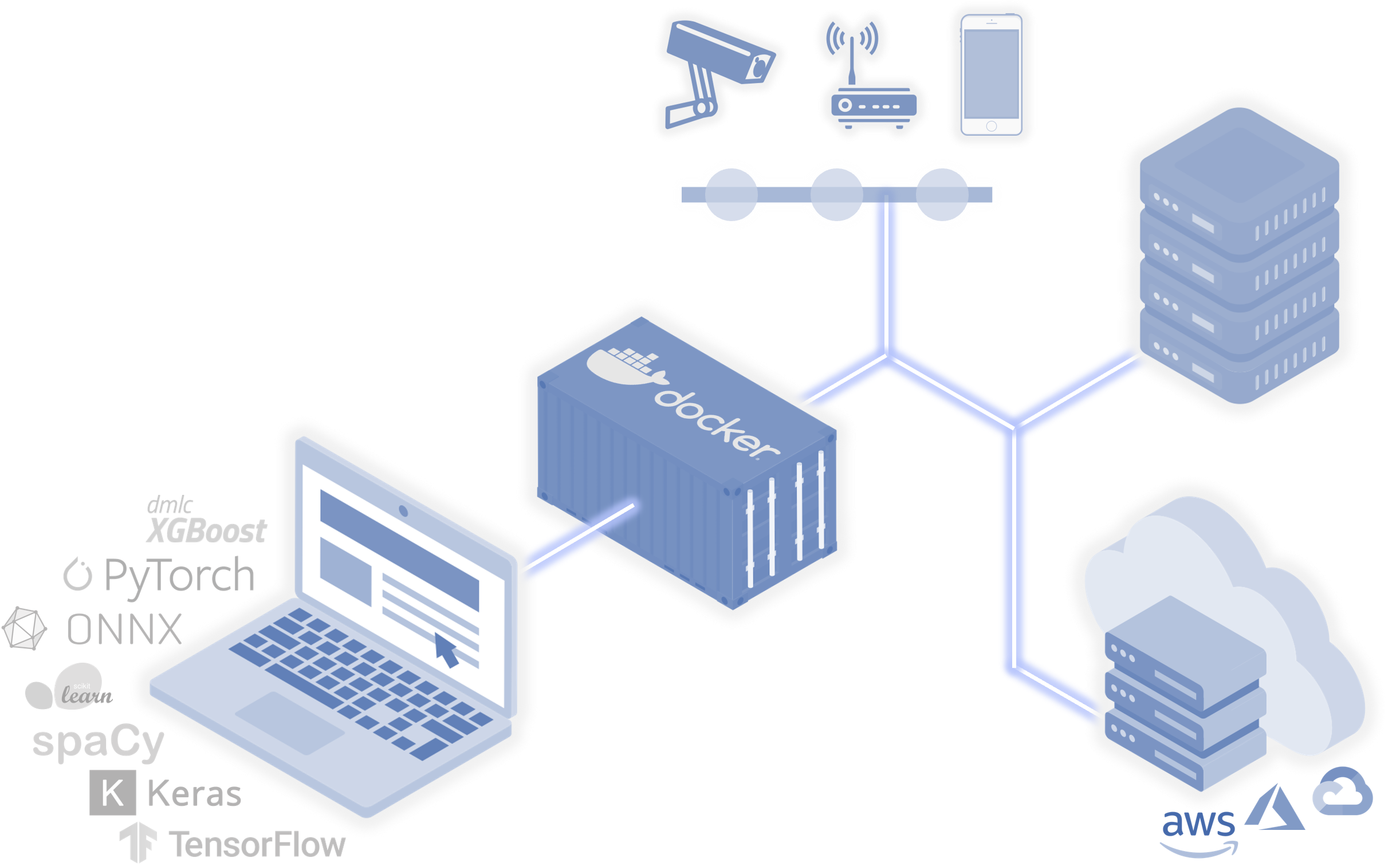

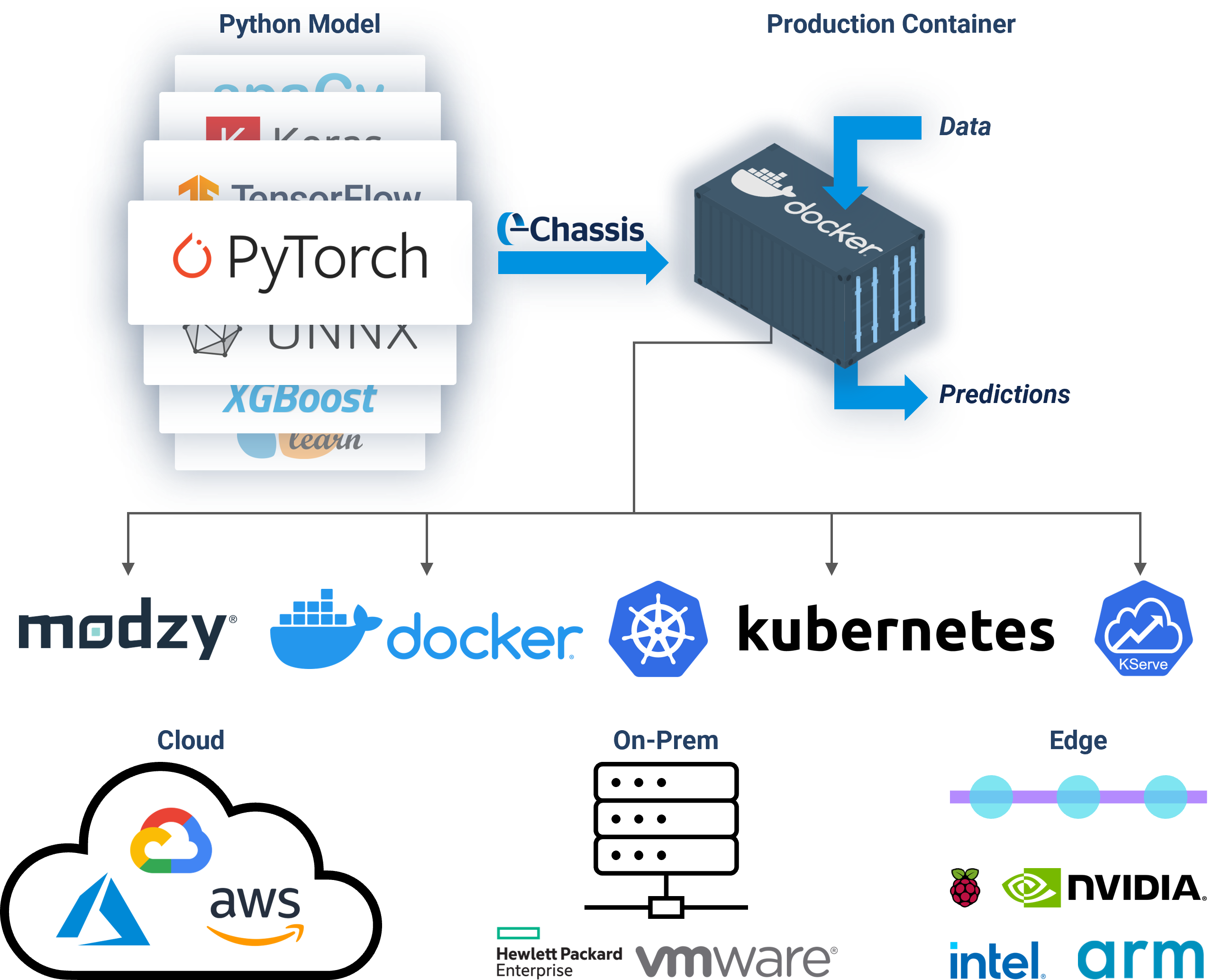

Package ML models into containerized prediction APIs in minutes, and run them anywhere: in the cloud, on-prem, or at the edge.


What is Chassis?

Chassis is an open-source project that automatically turns your ML models into production containers.

Chassis picks up right where your training code leaves off and builds containers for any target architecture. Run a single Chassis job, then deploy your containerized models in the cloud, on-prem, or on edge devices (Raspberry Pi, NVIDIA Jetson Nano, Intel NUC, and others).

Benefits:

Turns models into containers, automatically

Creates easy-to-use prediction APIs

Builds containers locally on Docker or as a K8s service

Chassis containers run on Docker, containerd, Modzy, and more

Compiles for both x86 and ARM processors

Supports GPU batch processing

No missing dependencies, perfect for edge AI

Getting Started

Getting started with Chassis is as easy as installing a Python package and incorporating a few lines of code into your existing workflow. Follow these short steps to start building your first ML container in just minutes!

What you will need:

Docker: To use Chassis's local build option, Docker must be installed on your machine. If it is not already installed, follow the Docker installation instructions for your OS.

Chassis SDK: The Chassis Python package enables you to build model containers locally. Install it from PyPI: pip install chassisml

Python model: Bring a model trained with your favorite Python ML framework (scikit-learn, PyTorch, TensorFlow, or any other framework you use).
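Before building, it can help to confirm that the first two prerequisites are in place. The following is a minimal sketch using only the Python standard library; the function name check_prerequisites is hypothetical and not part of Chassis. It only checks that a docker executable is on the PATH and that the chassisml package can be located, not that the Docker daemon is actually running.

```python
import importlib.util
import platform
import shutil


def check_prerequisites() -> dict[str, bool]:
    """Report whether the Docker CLI and the chassisml package can be found.

    Hypothetical helper for illustration; it does not start Docker or
    import chassisml, it only looks them up.
    """
    return {
        # True if a `docker` executable is discoverable on the PATH
        "docker": shutil.which("docker") is not None,
        # True if Python can locate an installed `chassisml` package
        "chassisml": importlib.util.find_spec("chassisml") is not None,
    }


status = check_prerequisites()
for name, found in status.items():
    print(f"{name}: {'found' if found else 'missing'}")

# The host CPU architecture (for example x86_64 or arm64) is useful to know
# when deciding which target architecture to build for.
print(f"host architecture: {platform.machine()}")
```

If either entry is reported as missing, install Docker or run pip install chassisml before continuing.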


    from typing import Mapping

    from chassisml import ChassisModel
    from chassis.builder import DockerBuilder

    # NOTE: The code below is pseudocode that demonstrates the general
    # workflow when using Chassis; it will not execute as is. Substitute
    # your own Python framework, any relevant utility methods, and the
    # syntax specific to your model.
    import framework
    import preprocess, postprocess

    # load model
    model = framework.load("path/to/model/file")

    # define predict function
    def predict(input_data: Mapping[str, bytes]) -> dict[str, bytes]:
        # preprocess data
        data = preprocess(input_data["input"])
        # perform inference
        predictions = model.predict(data)
        # process output
        output = postprocess(predictions)
        return {"results.json": output}

    # create ChassisModel object (named separately so it does not shadow
    # the trained model that predict() uses)
    chassis_model = ChassisModel(predict)
    builder = DockerBuilder(chassis_model)

    # build container with local Docker option
    # (Docker image names must be lowercase and cannot contain spaces)
    build_response = builder.build_image(name="my-first-chassis-model", tag="0.0.1")
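The predict function above takes a mapping of input names to raw bytes and returns a dict of output names to raw bytes. As a rough illustration of that contract, here is a self-contained sketch that runs without Chassis or any ML framework. ThresholdModel is a hypothetical stand-in for a trained model, and the JSON input format is an assumption made for this example, not something Chassis requires.

```python
import json
from typing import Mapping


class ThresholdModel:
    """Hypothetical stand-in for a trained model (not part of Chassis)."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold

    def predict(self, rows: list[list[float]]) -> list[int]:
        # Label a row 1 when the mean of its values exceeds the threshold.
        return [int(sum(row) / len(row) > self.threshold) for row in rows]


model = ThresholdModel()


def predict(input_data: Mapping[str, bytes]) -> dict[str, bytes]:
    # preprocess: decode the bytes under the "input" key into rows of floats
    rows = json.loads(input_data["input"].decode("utf-8"))
    # perform inference
    labels = model.predict(rows)
    # process output: serialize the predictions back to bytes
    payload = json.dumps({"predictions": labels}).encode("utf-8")
    return {"results.json": payload}


# Call the function directly, the same way a container would hand it raw bytes.
sample = {"input": json.dumps([[0.9, 0.8], [0.1, 0.2]]).encode("utf-8")}
result = predict(sample)
print(result["results.json"].decode("utf-8"))  # {"predictions": [1, 0]}
```

Exercising the function locally like this, with bytes in and bytes out, is a cheap way to catch serialization mistakes before passing it to ChassisModel.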